BeautifulSoup Response Page Status

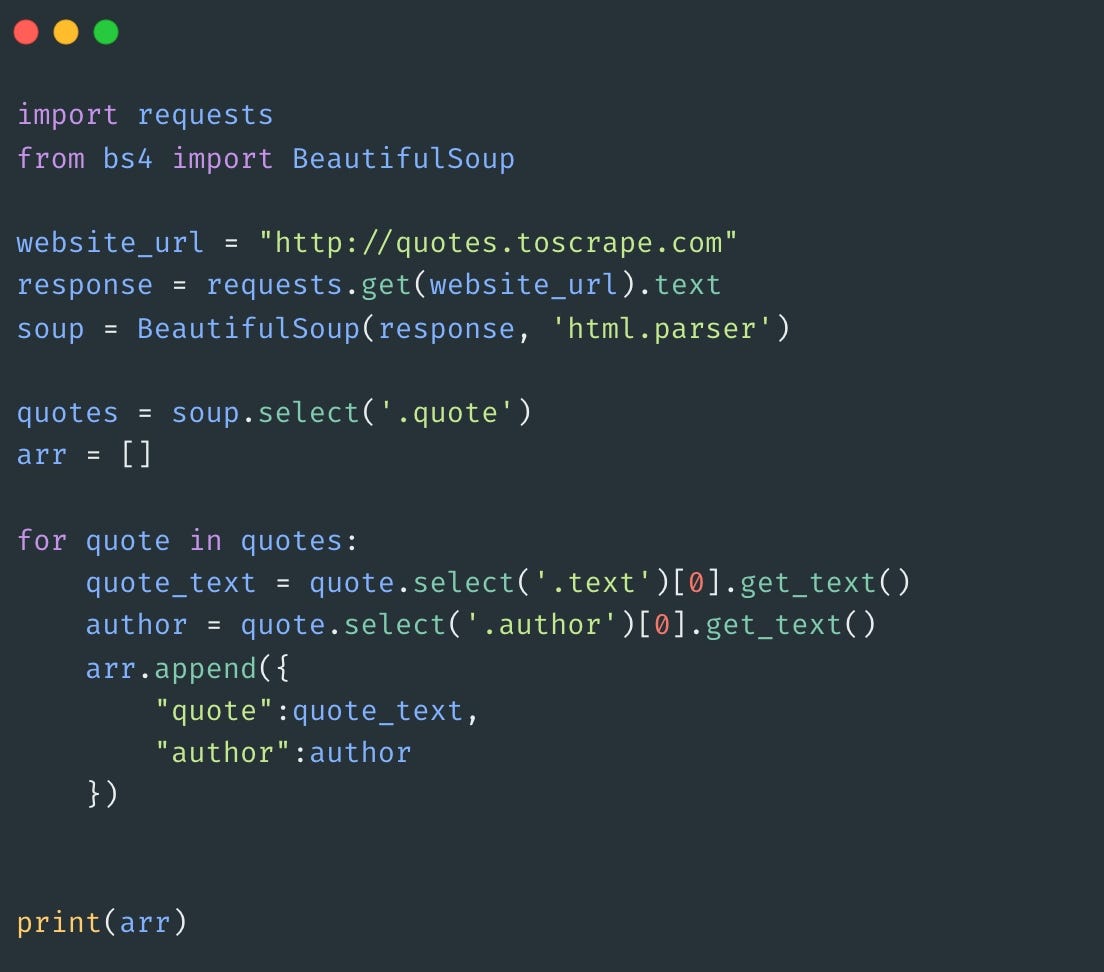

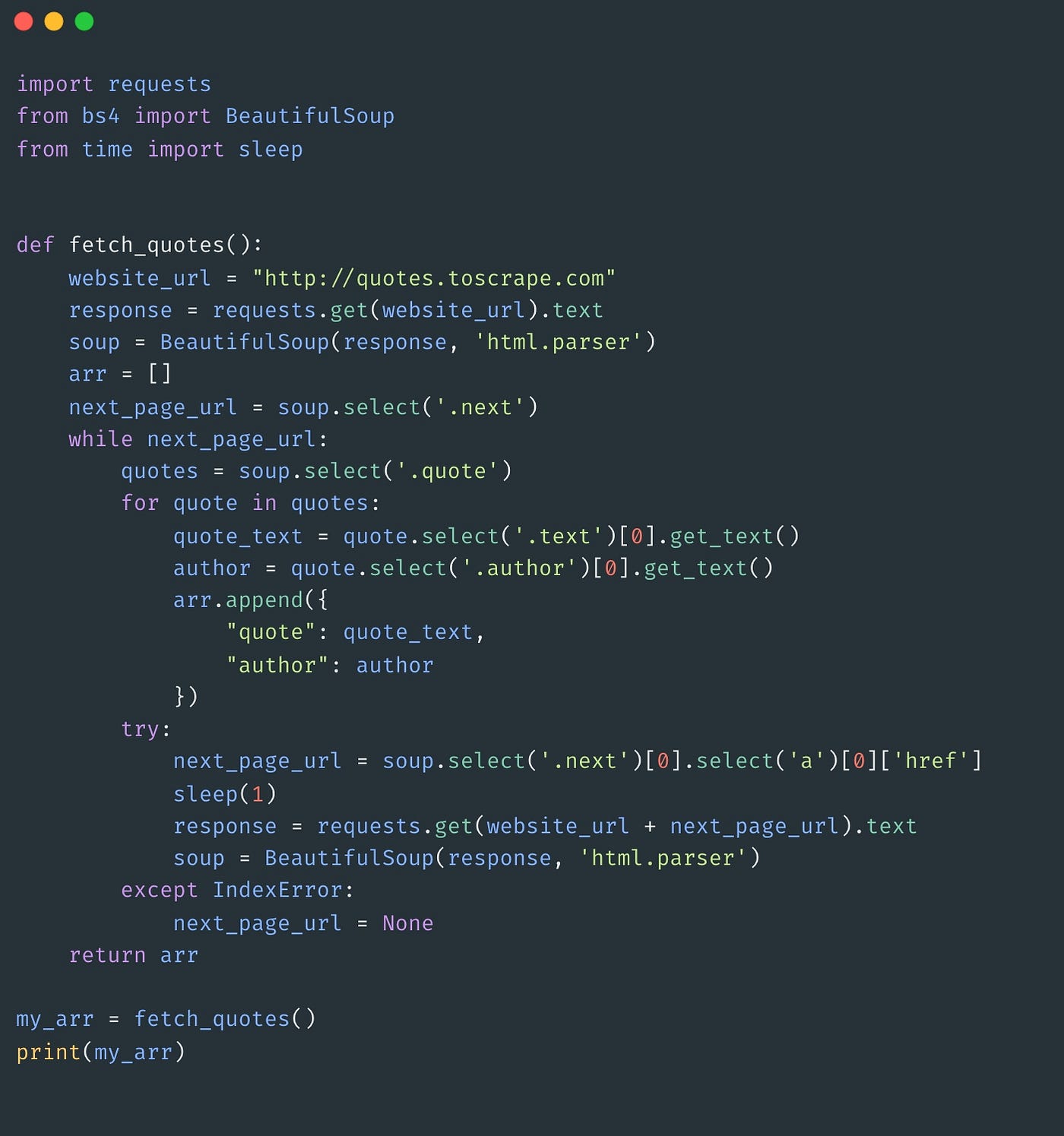

We use a module called `requests`, which has a `get` method that returns a response containing the page content, status, and so on. We save the response to an object called `page`, extract the page content from it with the `page.text` attribute, and use Beautiful Soup to parse the HTML document with Python's built-in parser, `html.parser`, so that we can access the data.
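The flow described above might look like the following minimal sketch. The helper name `fetch_soup` and the 10-second timeout are assumptions for illustration, and the sample HTML string stands in for a downloaded page so that the parsing step can be tried without a network connection.

```python
import requests
from bs4 import BeautifulSoup


def fetch_soup(url):
    """Download a page and parse it (hypothetical helper, not from the original)."""
    page = requests.get(url, timeout=10)  # response object: content, status, headers
    return BeautifulSoup(page.text, "html.parser")  # Python's built-in HTML parser


# Offline stand-in for page.text, so the parsing step can be tried on its own.
sample_html = "<html><head><title>Demo</title></head><body><p>Hello</p></body></html>"
soup = BeautifulSoup(sample_html, "html.parser")
print(soup.title.string)  # text inside the <title> tag
```

With network access, `fetch_soup` would be called with the address of the page to scrape and would return the same kind of `soup` object.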


The `find` method returns the first matching element from the soup object, or `None` if nothing matches.
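As a small illustration of that behavior, the sketch below runs `find` on an inline HTML string (made up for this example) that contains two matching tags.

```python
from bs4 import BeautifulSoup

html = "<ul><li>first</li><li>second</li></ul>"
soup = BeautifulSoup(html, "html.parser")

first_item = soup.find("li")   # only the first <li> is returned
missing = soup.find("table")   # no <table> in the document, so this is None

print(first_item.get_text())
print(missing)
```

Because `find` can return `None`, it is worth checking the result before calling methods on it.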

To structure the code, let's create a new function, `get_dom` (for Document Object Model), that includes all of the preceding code. Either `page.text` or `page.content` can be passed to the parser, for example `soup = BeautifulSoup(page.text, 'html.parser')`. To show the contents of the page on the terminal, we can print it with the `prettify` method, which turns the Beautiful Soup parse tree into a nicely formatted Unicode string.
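A possible shape for `get_dom` is sketched below. It is a simplification: this version takes HTML text directly instead of fetching a URL, which is an assumption made so the example runs offline.

```python
from bs4 import BeautifulSoup


def get_dom(html_text):
    """Parse HTML text into a Beautiful Soup tree (simplified: no download step)."""
    return BeautifulSoup(html_text, "html.parser")


dom = get_dom("<div><p>Hi</p></div>")
pretty = dom.prettify()  # a str with one tag or text node per line, indented
print(pretty)
```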

In the above example we have used the `find` method. For a better understanding, let us follow a few guidelines and steps that will help us simplify things and produce efficient code. If the request succeeds, proceed; otherwise, exit.

We call the `raise_for_status` method, which raises an `HTTPError` if the HTTP request returned an unsuccessful status code. The `status_code` attribute of the response indicates whether the HTTP communication between our code and the website was a success or a failure. If our site is available, we want to get the response code 200.
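To make the `raise_for_status` behavior concrete, here is a minimal sketch. The helper name `is_successful` is an assumption, and the two responses are built by hand rather than fetched, purely so the example needs no network access.

```python
import requests


def is_successful(response):
    """Return True if raise_for_status() does not raise, False otherwise."""
    try:
        response.raise_for_status()  # raises requests.HTTPError for 4xx/5xx codes
    except requests.HTTPError:
        return False
    return True


# Hand-built responses stand in for real ones (illustration only).
ok = requests.Response()
ok.status_code = 200
not_found = requests.Response()
not_found.status_code = 404

print(is_successful(ok), is_successful(not_found))
```

In a real script the response would come from `requests.get`, and the `except` branch is where the program would log the failure or exit.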

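The request itself is `response = requests.get(url, headers=headers)`, followed by a check such as `if response.status_code == 200:`. Beautiful Soup is imported with `from bs4 import BeautifulSoup`, and the `soup` object is created by calling `BeautifulSoup`. In the real world, it is often used for web scraping projects.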

Pass the URL to the `get` function so that it sends a GET request to the URL and returns a response. Check the status code of the response received (the success code is 200) with `print(r)`. Then parse the HTML with `soup = BeautifulSoup(r.content, 'html.parser')`, locate the content block with `s = soup.find('div', class_='entry-content')`, and print it with `print(s)`.
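The class-based lookup can be tried on its own with a short sketch. The inline HTML below is invented for the example; only the `entry-content` class name comes from the text above.

```python
from bs4 import BeautifulSoup

html = """
<div class="sidebar">Menu</div>
<div class="entry-content"><p>Article body</p></div>
"""
soup = BeautifulSoup(html, "html.parser")

# class_ has a trailing underscore because class is a reserved word in Python.
s = soup.find("div", class_="entry-content")
print(s.get_text(strip=True))
```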

Note that we are going to use Python decorators here. We are going to take the variable `page` we used before and convert it using the `str` function. BeautifulSoup is not a web scraping library per se.

There are several statuses we may receive. Next, we need to decompose this string into a Python representation of the page using BeautifulSoup.

If it isn't available, we will get the response code 404. First, collect the username from the command line, and then send the request to the Twitter page. Searching by class finds the given tag with the given attribute.

200: A status of 200 implies a successful request. Our code looks like this: `response_code = str(page.status_code)`. Furthermore, our application needs to display the URL text itself.

Call `requests.get(url, args)`. Now parse the HTML content using bs4. The standard HTTP response status codes and their definitions are documented in the HTTP specification.
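For a quick lookup of what a status code means, Python's standard library includes the `http.HTTPStatus` enumeration. The sketch below prints the standard phrase for the two codes discussed so far.

```python
from http import HTTPStatus

# Map numeric status codes to their standard reason phrases.
phrases = {code: HTTPStatus(code).phrase for code in (200, 404)}

for code, phrase in phrases.items():
    print(code, phrase)
```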

If there is no exception and the status code returned in the response is 200, i.e. the request succeeded, we can go on to build the soup object through the `bs4` module. Well, let's start by looking at what Beautiful Soup is.

Printing `r.status_code` outputs 200. Beautiful Soup allows us to parse the response of web pages using HTML or XML parsers. To make sure the request succeeded, we use an `if` clause on the `status_code` attribute to check that we received a 200 status. Next comes parsing our request data with Beautiful Soup.
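The `if` clause on `status_code` could be wrapped up as in the sketch below. The helper name `parse_if_ok` is hypothetical, and `SimpleNamespace` objects stand in for real `requests` responses so that the example runs without a network connection.

```python
from types import SimpleNamespace

from bs4 import BeautifulSoup


def parse_if_ok(response):
    """Return a soup for a 200 response, or None for any other status."""
    if response.status_code == 200:
        return BeautifulSoup(response.text, "html.parser")
    return None


# Stand-ins exposing only the two attributes the helper reads (illustration only).
good = SimpleNamespace(status_code=200, text="<h1>Hi</h1>")
bad = SimpleNamespace(status_code=404, text="<h1>Not Found</h1>")

print(parse_if_ok(good).h1.string)
print(parse_if_ok(bad))
```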

Beautiful Soup is a library that allows you to efficiently and easily pull information out of HTML. Now let's parse the content as a BeautifulSoup object to extract the title and header tags of the website, and store the result back in the original `soup` variable. In the loop, we go through all the links and get the `href` attribute of each link from its attributes.
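The link loop can be sketched as follows. The inline HTML is invented for the example, and using `link.get("href")` is one possible way to read the attribute; it returns `None` instead of raising when a link has no `href`.

```python
from bs4 import BeautifulSoup

html = '<a href="/a">A</a><a>no href</a><a href="https://example.com/b">B</a>'
soup = BeautifulSoup(html, "html.parser")

hrefs = []
for link in soup.find_all("a"):
    href = link.get("href")  # None when the attribute is missing
    if href is not None:
        hrefs.append(href)

print(hrefs)
```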

Calling `print(soup.prettify())` will render each HTML tag on its own line. In this guide, we'll see how you can easily use ScraperAPI with the Python Requests library to scrape the web at scale. One way would be to check the status code of the request and see if you received a partial content response (206).

Import all the required modules, for example `from bs4 import BeautifulSoup`, and then create the `soup` object from the response `r`.

Checking the type of `r.text` gives `<class 'str'>`, so we at least know that we have a string. The links can then be printed to a text file.

Convert the response text into a BeautifulSoup object and see if there is any `div` tag in the HTML with the class `errorpage-topbar`. Automatically catch and retry failed requests returned by ScraperAPI. Now, I've truncated the response because there's actually a lot of text making up my website's homepage, and I didn't want to make you see all of it.
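The `errorpage-topbar` check might be written as the sketch below. The helper name `looks_like_error_page` and both sample strings are assumptions for illustration; only the class name comes from the text above.

```python
from bs4 import BeautifulSoup


def looks_like_error_page(html_text):
    """Return True if the HTML contains a div with class errorpage-topbar."""
    soup = BeautifulSoup(html_text, "html.parser")
    return soup.find("div", class_="errorpage-topbar") is not None


error_html = '<div class="errorpage-topbar">Something went wrong</div>'
normal_html = '<div class="profile">Welcome</div>'

print(looks_like_error_page(error_html), looks_like_error_page(normal_html))
```

A check like this is useful when a site returns an error page with a 200 status, so the status code alone does not reveal the failure.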

We will walk you through exactly how to create a scraper. In this tutorial, we will explore numerous examples of using the BeautifulSoup library in Python. So, to begin, we'll need HTML.

So now that we have this request data in `r.text`, how do we start working with it? We will pull the HTML from the Hacker News landing page using the `requests` Python package.

Send requests to ScraperAPI using our API endpoint, Python SDK, or proxy port. The soup is then built from `response.data` and `partial_data`.

And we get the anchor elements by setting the `parse_only` argument to `SoupStrainer('a')`.
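A minimal sketch of the `parse_only` approach is shown below, using an inline HTML string invented for the example. With the strainer in place, only the `a` tags end up in the parse tree.

```python
from bs4 import BeautifulSoup, SoupStrainer

html = '<p>Intro</p><a href="/one">One</a><div><a href="/two">Two</a></div>'

only_anchors = SoupStrainer("a")  # keep <a> tags, skip everything else
soup = BeautifulSoup(html, "html.parser", parse_only=only_anchors)

links = [a["href"] for a in soup.find_all("a")]
print(links)
```

Restricting the parse this way can save time and memory on large pages when only the links are needed.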

